The PyTorch Lightning framework was built to make deep learning research faster. Why write endless engineering boilerplate? Why limit your training to a single GPU? How can you easily reproduce your old experiments?

Our mission is to minimize engineering cognitive load and maximize efficiency, giving you all the latest AI features and engineering best practices to make your models scale at lightning speed. All of Lightning’s automated features are rigorously tested with every change, which reduces the chance that errors slip through.

To make the Lightning experience even more comprehensive, we want to share implementations held to the same Lightning standards. PyTorch Lightning Bolts is a collection of PyTorch Lightning implementations of popular models that are well tested and optimized for speed on multiple GPUs and TPUs.

In this article, we’ll give you a quick glimpse of the new Lightning Bolts collection and how you can use it to try crazy research ideas with just a few lines of code!

We’re happy to introduce Lightning Bolts, a new community-built deep learning research and production toolbox, featuring a collection of well-established and SOTA models and components, pre-trained weights, callbacks, loss functions, datasets, and data modules.

Everything is implemented in Lightning, tested daily, benchmarked, and documented, and it runs on CPUs, GPUs, and TPUs, including with 16-bit precision.

What separates Bolts from the other libraries out there is that it is built and used by AI researchers. Every Bolts component is modular, so it can be easily extended or mixed with arbitrary parts of the rest of the codebase. Bolts models are designed for you to explore new research ideas: just subclass, override, and train!

Here are a few tools available:

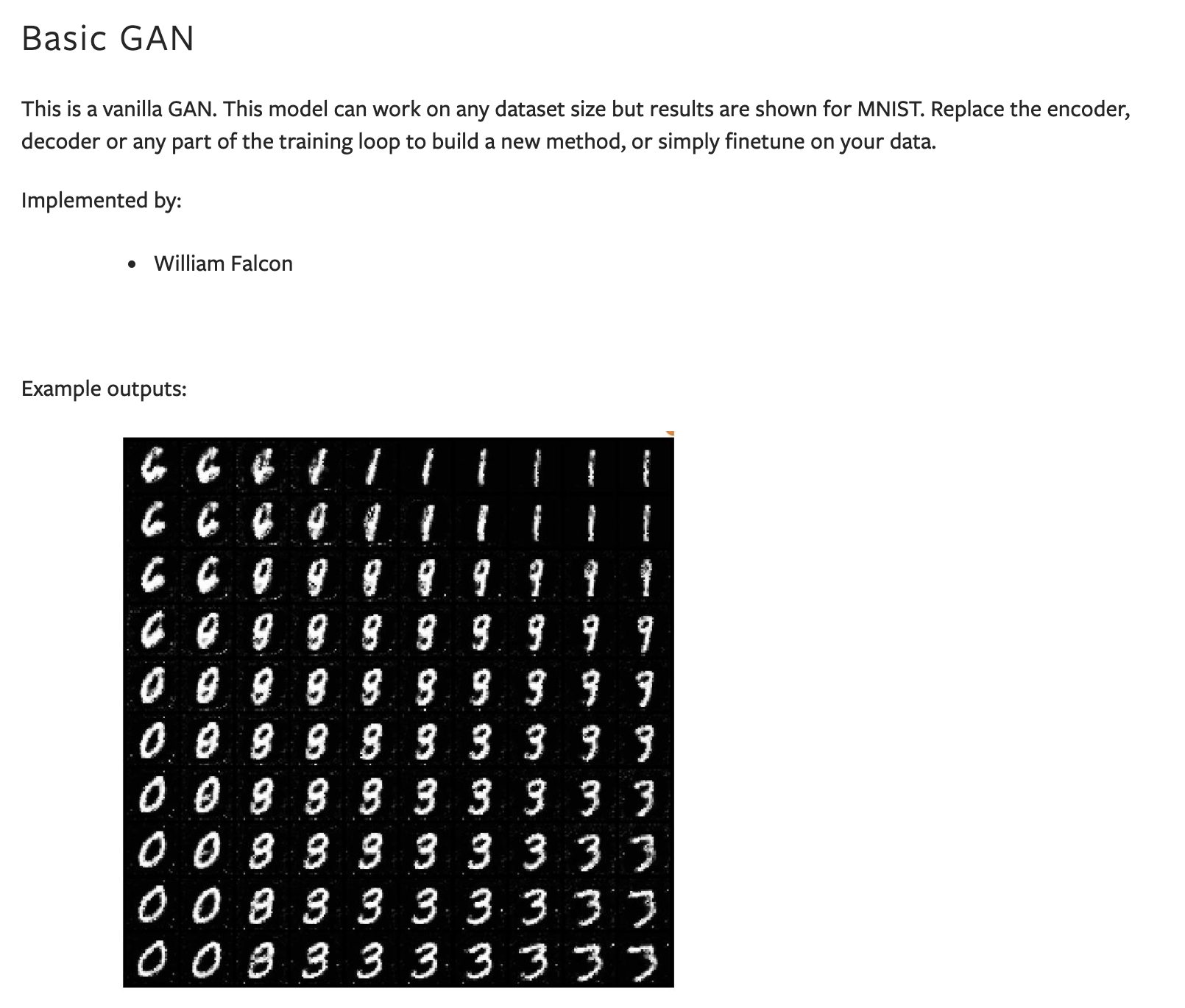

Bolts has rigorously tested and benchmarked baselines. From VAEs to GANs to GPT to self-supervised models, you don’t have to spend months implementing baselines before trying new ideas. Instead, subclass one of ours and try your idea!

Bolts provides pre-trained weights for most of the standard datasets. These are useful when you don’t have enough data, time, or money to do your own training, and they can also serve as a benchmark when improving or testing your own model.

For example, you could use a pre-trained VAE to generate features for an image dataset and compare them with features from CPC.

Bolts modules are written to be easily extended for research. For example, you can subclass SimCLR and make changes to the NT-Xent loss.
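For reference, NT-Xent (normalized temperature-scaled cross-entropy) is the SimCLR contrastive loss: each embedding has to pick out its augmented partner among all the other embeddings in the batch. The sketch below is a dependency-free, plain-Python version written for readability. It is not Bolts’ PyTorch implementation, and the function names and the default temperature of 0.5 are illustrative assumptions. The temperature and the similarity function are the kind of knobs you might change in a SimCLR subclass.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent(z1: List[Vector], z2: List[Vector], temperature: float = 0.5) -> float:
    """Mean NT-Xent loss for N positive pairs (z1[i], z2[i])."""
    n = len(z1)
    z = z1 + z2  # 2N embeddings; z[i] and z[i + n] form a positive pair
    total = 0.0
    for i in range(2 * n):
        j = (i + n) % (2 * n)  # index of i's positive partner
        # Temperature-scaled similarities to every other embedding (anchor excluded)
        logits = {k: cosine(z[i], z[k]) / temperature for k in range(2 * n) if k != i}
        # -log(exp(s_ij) / sum_k exp(s_ik)), using log-sum-exp for numerical stability
        m = max(logits.values())
        log_denominator = m + math.log(sum(math.exp(s - m) for s in logits.values()))
        total += log_denominator - logits[j]
    return total / (2 * n)


aligned = nt_xent([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = nt_xent([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)  # → True
```

When each view matches its partner, the loss is low; when the partners are orthogonal and a "negative" matches the anchor exactly, the loss rises by the full scaled similarity gap.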

The beauty of Bolts is that it’s easy to plug and play with your Lightning modules or any PyTorch dataset. Mix and match data, modules, and components as you please!

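```python
from pl_bolts.datamodules import FashionMNISTDataModule
from pl_bolts.models.vision import ImageGPT

# PATH: placeholder for your data directory
model = ImageGPT(datamodule=FashionMNISTDataModule(PATH))
```

Or pass in DataLoaders for any dataset of your choice:

```python
from pytorch_lightning import Trainer
from torch.utils.data import DataLoader
from pl_bolts.models.vision import ImageGPT

model = ImageGPT()

# Pass your own training and validation DataLoaders instead of a datamodule
Trainer().fit(
    model,
    train_dataloader=DataLoader(…),
    val_dataloaders=DataLoader(…),
)
```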

And train on any hardware accelerator, using all of the Lightning Trainer flags!

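```python
import pytorch_lightning as pl
from pl_bolts.datamodules import FashionMNISTDataModule
from pl_bolts.models.vision import ImageGPT

model = ImageGPT(datamodule=FashionMNISTDataModule(PATH))

# CPUs
pl.Trainer().fit(model)

# GPUs
pl.Trainer(gpus=8).fit(model)

# TPUs
pl.Trainer(tpu_cores=8).fit(model)
```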

For more details, read the docs.

Lightning Bolts also includes a collection of classical (non-deep learning) algorithms that can train on multiple GPUs and TPUs.

For example, you can run logistic regression on ImageNet on 2 GPUs with 16-bit precision.
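To get a feel for the scale involved, here is a back-of-the-envelope sketch that counts the parameters of a multinomial logistic regression mapping flattened ImageNet images straight to class scores. It assumes 224×224 RGB inputs and 1,000 classes, and the helper `logistic_regression_size` is hypothetical, not part of Bolts.

```python
# Hypothetical helper (not part of Bolts): size of a multinomial logistic
# regression that maps flattened images directly to class scores.
def logistic_regression_size(height: int = 224, width: int = 224,
                             channels: int = 3, num_classes: int = 1000):
    input_dim = height * width * channels  # flattened pixels per image
    weights = input_dim * num_classes      # one weight per (pixel, class) pair
    biases = num_classes                   # one bias per class
    return input_dim, weights + biases


input_dim, n_params = logistic_regression_size()
fp32_mb = n_params * 4 / 1e6  # 4 bytes per 32-bit float
fp16_mb = n_params * 2 / 1e6  # 2 bytes per 16-bit float
print(input_dim, n_params, round(fp32_mb), round(fp16_mb))
# → 150528 150529000 602 301
```

That is roughly 150.5 million parameters: about 602 MB of weights in 32-bit floats versus about 301 MB in 16-bit floats. These figures cover the weights only; gradients, optimizer state, and activations add more, so even this "simple" linear model benefits from 16-bit precision and multiple GPUs.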


Bolts houses a collection of many of the current state-of-the-art self-supervised algorithms.

In their latest paper, “A Framework For Contrastive Self-Supervised Learning And Designing A New Approach,” William Falcon and Kyunghyun Cho explore and implement many of the latest self-supervised learning algorithms. Those implementations are available in Bolts under the self-supervised learning suite.

You can use self-supervised learning algorithms to extract image features, to train on unlabeled data, or to mix and match parts of the models to create your own new method:

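```python
from pl_bolts.models.self_supervised import CPCV2
from pl_bolts.losses.self_supervised_learning import FeatureMapContrastiveTask

# AMDIM-style contrastive task: compare feature maps 0 vs 1, 1 vs 1, and 0 vs 2
amdim_task = FeatureMapContrastiveTask(comparisons='01, 11, 02', bidirectional=True)

# Swap the AMDIM task into CPC v2
model = CPCV2(contrastive_task=amdim_task)
```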
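The `comparisons` string reads as a list of feature-map index pairs. Assuming each two-digit token means "anchor-view feature map i versus positive-view feature map j" (our reading of the parameter, not verified against the Bolts source), and that `bidirectional=True` also scores each pair with the two views’ roles swapped, the helpers below show how `'01, 11, 02'` expands into individual comparisons. `parse_comparisons` and `comparison_directions` are hypothetical names for illustration, not Bolts functions.

```python
from typing import List, Tuple

MapRef = Tuple[str, int]  # (view name, feature-map index)


def parse_comparisons(comparisons: str) -> List[Tuple[int, int]]:
    """Hypothetical: turn '01, 11, 02' into (anchor_map, positive_map) index pairs."""
    pairs = []
    for token in comparisons.split(","):
        token = token.strip()
        # Assumes single-digit indices, one per character
        pairs.append((int(token[0]), int(token[1])))
    return pairs


def comparison_directions(pairs: List[Tuple[int, int]],
                          bidirectional: bool) -> List[Tuple[MapRef, MapRef]]:
    """Hypothetical: list each comparison as a (query, key) pair of view-tagged maps."""
    directions = []
    for a, p in pairs:
        directions.append((("anchor", a), ("positive", p)))
        if bidirectional:
            # Same two maps, with the roles of the two views swapped
            directions.append((("positive", p), ("anchor", a)))
    return directions


pairs = parse_comparisons('01, 11, 02')
print(pairs)  # → [(0, 1), (1, 1), (0, 2)]
print(len(comparison_directions(pairs, bidirectional=True)))  # → 6
```

Under these assumptions, `bidirectional=True` doubles the number of contrastive terms, including for same-index pairs such as `'11'`.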

You can find Bolts implementations for:

Bolts models and components are built by the Lightning community. The Lightning team guarantees that contributions are:

Want to get your implementation tested on CPUs, GPUs, and TPUs and with mixed precision, and help us grow? Consider contributing your model to Bolts (you can even do it from your own repo) to make it available to the Lightning community!

You can find a list of model suggestions in our GitHub issues. Your models don’t have to be state-of-the-art to get added to Bolts, just well designed and tested. If you have any ideas, start a discussion on our Slack channel.


Thanks to Justus Schock